Understanding Degrees of Freedom:

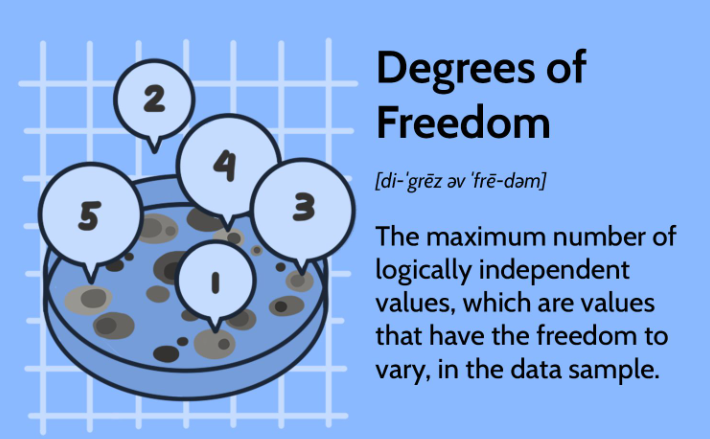

How do you find degrees of freedom? In statistics, degrees of freedom (DF) are the number of values in a calculation that are free to vary once the constraints imposed by the analysis are taken into account. The most common constraint is a parameter estimated from the same data, and each such estimate uses up one degree of freedom. In a one-sample t-test, for example, the sample mean must be estimated before the standard deviation can be computed, so DF equal the sample size minus one. In regression, the residual DF (the number of observations minus the number of estimated coefficients) measure how much information remains for estimating the error variance. Because the shapes of the t, chi-square, and F distributions depend on DF, DF determine the critical values used in hypothesis testing. Understanding DF therefore clarifies both the flexibility and the limits of a data set and supports sound statistical inference and well-informed decisions.
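A quick way to see where the "minus one" comes from: deviations from the sample mean always sum to zero, so once the mean has been estimated, any n – 1 deviations determine the last one. The Python sketch below uses a made-up sample purely for illustration, and the function names are hypothetical.

```python
def deviations(sample):
    """Deviations of each observation from the sample mean."""
    mean = sum(sample) / len(sample)
    return [x - mean for x in sample]


def last_deviation(free_deviations):
    """Given any n - 1 deviations, the remaining one is forced,
    because all n deviations from the mean must sum to zero."""
    return -sum(free_deviations)


sample = [4.0, 7.0, 9.0, 12.0]             # mean is 8.0
devs = deviations(sample)                  # [-4.0, -1.0, 1.0, 4.0]
print(devs)
print(last_deviation(devs[:-1]))           # 4.0, recovered from the other three
print("degrees of freedom:", len(sample) - 1)  # 3
```

Only three of the four deviations carry independent information, which is why the sample variance divides by n – 1 rather than n.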

Methods of Calculation:

Degrees of freedom (DF) are calculated differently depending on the type of statistical analysis. Each test or model has its own formula, reflecting how many observations are available and how many quantities must be estimated from them. For a one-sample t-test, for instance, DF equal the number of observations minus one. For more complex analyses such as ANOVA or regression, the calculation accounts for group sizes, the number of groups or factors, and the number of estimated parameters. Knowing these formulas is essential for reliable statistical inference and hypothesis testing. This section examines how DF are calculated for common statistical tests and models, with step-by-step examples.

T-test Degrees of Freedom:

T-tests compare means, and each type of t-test has its own DF formula. In a one-sample t-test, DF equal the sample size minus one (n – 1). In an independent-samples t-test that assumes equal variances, the pooled DF are n1 + n2 – 2, because one mean is estimated for each group. When the variances are not assumed equal, Welch's t-test uses the Welch–Satterthwaite approximation, which usually gives a non-integer DF between the smaller of n1 – 1 and n2 – 1 and n1 + n2 – 2. A paired-samples t-test compares two measurements taken on the same subjects; it is a one-sample t-test on the differences, so DF equal the number of pairs minus one. Knowing which formula applies is necessary for choosing the correct critical value and interpreting the result. This section examines how DF are calculated in each type of t-test, with practical examples.
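The formulas above can be sketched in Python as follows. This is a minimal illustration rather than a library API: the function names are hypothetical, the sample data are invented, and `df_welch` implements the standard Welch–Satterthwaite approximation using sample variances from the standard-library `statistics` module.

```python
from statistics import variance


def df_one_sample(n):
    """One-sample t-test: n observations, one estimated mean."""
    return n - 1


def df_paired(n_pairs):
    """Paired t-test: a one-sample test on the n_pairs differences."""
    return n_pairs - 1


def df_pooled(n1, n2):
    """Independent-samples t-test assuming equal variances (pooled)."""
    return n1 + n2 - 2


def df_welch(x, y):
    """Welch-Satterthwaite DF for independent samples with unequal variances.

    The result is usually not an integer and lies between
    min(n1, n2) - 1 and n1 + n2 - 2.
    """
    n1, n2 = len(x), len(y)
    a = variance(x) / n1  # squared standard error of the mean of x
    b = variance(y) / n2  # squared standard error of the mean of y
    return (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))


x = [1, 2, 3, 4, 5]
y = [2, 4, 6, 8, 10, 12]
print(df_one_sample(len(x)))        # 4
print(df_pooled(len(x), len(y)))    # 9
print(round(df_welch(x, y), 2))     # 6.97
```

Because the second sample is much more variable, Welch's DF (about 6.97) fall well below the pooled value of 9, which makes the test more conservative.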

Degrees of Freedom in ANOVA:

1. Between-Group Variability:

- Discusses how the between-group degrees of freedom, k – 1 for k groups, reflect the variability between group means.

- Explains why only k – 1 group means are free to vary once the grand mean is fixed.

2. Within-Group Variability:

- Explores the within-group degrees of freedom, N – k for N total observations, which reflect the variability inside each group.

- Discusses how each group of size ni contributes ni – 1 degrees of freedom, so larger groups supply more within-group DF.

3. One-Way ANOVA:

- Explains how a one-way ANOVA partitions the total DF, N – 1, into DF_between = k – 1 and DF_within = N – k.

- Offers step-by-step examples of the calculation; for instance, three groups of 10 observations give DF_between = 2, DF_within = 27, and DF_total = 29.

4. Two-Way ANOVA:

- Extends the calculation to a two-way ANOVA with factor A at a levels and factor B at b levels, whose main effects have a – 1 and b – 1 degrees of freedom.

- Discusses the A × B interaction, which has (a – 1)(b – 1) degrees of freedom, and the error DF of N – ab in a design with replication.

5. Interpretation and Application:

- Summarizes the importance of degrees of freedom in ANOVA analyses.

- Provides insights into interpreting ANOVA results, where each F-ratio is compared with an F distribution whose numerator DF belong to the effect and whose denominator DF belong to the error term.
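As a sketch of the ANOVA bookkeeping above, the hypothetical helpers below return the DF for each source of variation. The two-way version assumes a balanced design with the same number of observations in every cell; unbalanced designs need more care. The F-ratio for each effect would then be referred to an F distribution with (effect DF, error DF).

```python
def one_way_anova_df(group_sizes):
    """DF for a one-way ANOVA given the size of each group."""
    k = len(group_sizes)
    n_total = sum(group_sizes)
    return {"between": k - 1, "within": n_total - k, "total": n_total - 1}


def two_way_anova_df(a, b, n_per_cell):
    """Balanced two-way ANOVA with replication: a levels of A, b levels of B."""
    n_total = a * b * n_per_cell
    return {
        "A": a - 1,
        "B": b - 1,
        "AxB": (a - 1) * (b - 1),
        "error": a * b * (n_per_cell - 1),  # equals N - ab
        "total": n_total - 1,
    }


print(one_way_anova_df([8, 10, 12]))
# {'between': 2, 'within': 27, 'total': 29}
print(two_way_anova_df(a=3, b=2, n_per_cell=5))
# {'A': 2, 'B': 1, 'AxB': 2, 'error': 24, 'total': 29}
```

In both cases the component DF add up to the total DF, N – 1, which is a useful check on any hand calculation.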

Degrees of Freedom in Chi-Square Tests:

Chi-Square Test for Independence:

- Discusses how degrees of freedom are determined in chi-square tests assessing the independence of categorical variables.

- Explains the formula for calculating degrees of freedom from the number of categories in the variables: (r – 1)(c – 1) for a contingency table with r rows and c columns.

Chi-Square Test for Goodness-of-Fit:

- Explores the calculation of degrees of freedom in chi-square tests used to assess the fit between observed and expected frequencies.

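- Discusses how the degrees of freedom depend on the number of categories and the constraints on the expected frequencies: k – 1 for k categories, minus one more for each parameter estimated from the data to obtain the expected frequencies.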

Examples and Applications:

- Provides practical examples illustrating the calculation of degrees of freedom in chi-square tests.

- Discusses real-world applications of chi-square tests and their interpretation based on degrees of freedom.
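The chi-square rules can be sketched the same way. The helper names and the 2 × 3 table of counts are hypothetical; `chi_square_independence` computes the Pearson statistic from expected counts (row total × column total / grand total) and pairs it with (r – 1)(c – 1) DF. It does not compute a p-value, which would need a chi-square distribution function from a library such as SciPy.

```python
def df_independence(table):
    """DF for a chi-square test of independence on an r x c table."""
    rows = len(table)
    cols = len(table[0])
    return (rows - 1) * (cols - 1)


def df_goodness_of_fit(n_categories, n_estimated_params=0):
    """DF for a goodness-of-fit test: one DF lost because the expected
    counts must sum to the total, plus one per estimated parameter."""
    return n_categories - 1 - n_estimated_params


def chi_square_independence(table):
    """Pearson chi-square statistic and its DF for a table of counts."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand
            stat += (observed - expected) ** 2 / expected
    return stat, df_independence(table)


stat, df = chi_square_independence([[10, 20, 30], [20, 20, 20]])
print(round(stat, 3), df)                           # 5.333 2
print(df_goodness_of_fit(6))                        # fair-die test: 5
print(df_goodness_of_fit(5, n_estimated_params=1))  # 5 bins, one fitted parameter: 3
```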

Degrees of Freedom in Regression Analysis:

In regression analysis, the residual degrees of freedom (DF) are the number of observations left over after the model's coefficients have been estimated: n – p – 1 for a model with n observations, p predictors, and an intercept. DF are essential for evaluating predictor significance and model fit: the t-tests for individual coefficients use the residual DF, and the overall F-test uses p and n – p – 1. Each added predictor removes one residual DF, which makes the error-variance estimate less precise and widens confidence intervals for coefficients and predictions. Understanding DF in regression helps researchers balance model complexity against the available data so that results remain reliable and interpretable. DF also enter model selection criteria such as adjusted R², helping researchers choose the regression model best suited to their data and research questions.
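A minimal sketch of how residual DF shrink as predictors are added, assuming an ordinary least-squares model with an intercept. The function names and the R² value of 0.60 are hypothetical; adjusted R² is used here because its standard formula depends directly on the total and residual DF.

```python
def regression_df(n, p):
    """DF for a linear regression with an intercept and p predictors."""
    if n <= p + 1:
        raise ValueError("need more observations than estimated coefficients")
    return {"model": p, "residual": n - p - 1, "total": n - 1}


def adjusted_r2(r2, n, p):
    """Adjusted R^2: scales the unexplained share of variance by the
    ratio of total DF to residual DF, penalizing extra predictors."""
    df = regression_df(n, p)
    return 1 - (1 - r2) * df["total"] / df["residual"]


# Same hypothetical R^2 and sample size, increasing numbers of predictors.
for p in (1, 5, 10):
    df = regression_df(30, p)
    print(p, df["residual"], round(adjusted_r2(0.60, 30, p), 3))
# 1 28 0.586
# 5 24 0.517
# 10 19 0.389
```

With n = 30, going from 1 to 10 predictors cuts the residual DF from 28 to 19, and adjusted R² drops sharply even though the raw R² is held fixed.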

Degrees of Freedom in Experimental Design:

Definition:

Represents the number of independent pieces of information available for estimating parameters or testing hypotheses in an experimental design.

Calculation:

- Depends on the design’s complexity, such as the number of groups, observations, and levels within factors.

- Different designs have distinct formulas for determining degrees of freedom.

One-Way ANOVA:

- Considers variability between and within groups.

- DF_between = k – 1, DF_within = N – k, Total DF = DF_between + DF_within.

Factorial Design:

- Involves multiple factors with varying levels.

- DF_total = N – 1; each factor has (number of levels – 1) DF, and each interaction has the product of the DF of the factors involved.

Repeated Measures Design:

- Accounts for within-subject variability.

- DF_subjects = n – 1, DF_within = (N – 1) – DF_subjects, Total DF = DF_subjects + DF_within = N – 1, where n is the number of subjects and N is the total number of observations.
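To check the repeated-measures bookkeeping, the hypothetical helper below assumes a one-way repeated-measures design in which each of n subjects is measured under all k conditions, so N = nk. It also splits the within-subject DF into a conditions term (k – 1) and an error term ((n – 1)(k – 1)), which is how the repeated-measures F-test is usually formed.

```python
def repeated_measures_df(n_subjects, k_conditions):
    """One-way repeated-measures design: every subject measured under
    every condition, so N = n_subjects * k_conditions."""
    n_obs = n_subjects * k_conditions
    subjects = n_subjects - 1
    within = (n_obs - 1) - subjects                     # = N - n
    conditions = k_conditions - 1
    error = (n_subjects - 1) * (k_conditions - 1)       # within - conditions
    return {
        "subjects": subjects,
        "within": within,
        "conditions": conditions,
        "error": error,
        "total": n_obs - 1,
    }


print(repeated_measures_df(10, 3))
# {'subjects': 9, 'within': 20, 'conditions': 2, 'error': 18, 'total': 29}
```

The conditions effect would be tested with F on (2, 18) DF here, not on the (2, 27) DF a between-subjects ANOVA of the same 30 scores would use, because subject-to-subject variability has been removed first.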

Degrees of Freedom in Non-parametric Tests:

1. Effective Degrees of Freedom:

- Discusses effective degrees of freedom, a generalization used when DF cannot simply be counted, for example in non-parametric smoothing (where they are often defined as the trace of the smoother matrix) or when observations are dependent.

- Explores how sample size and data distribution influence the calculation of effective degrees of freedom.

2. Wilcoxon Signed-Rank Test:

- Explains that the Wilcoxon signed-rank test, a non-parametric test for paired data, does not use degrees of freedom; its null distribution depends on the number of non-zero differences, n.

- Provides a step-by-step explanation of the calculation, including how zero differences are dropped and tied absolute differences receive average ranks.

3. Kruskal-Wallis Test:

- Discusses the Kruskal-Wallis test, a non-parametric alternative to one-way ANOVA, whose H statistic is compared with a chi-square distribution on k – 1 degrees of freedom for k groups.

- Explores how group sizes affect this approximation, which becomes less accurate when groups are small.

4. Mann-Whitney U Test:

- Expands on the Mann-Whitney U test, used for comparing two independent samples, which, like the Wilcoxon signed-rank test, has no degrees of freedom.

- Discusses how the sample sizes n1 and n2 determine the exact distribution of U and the normal approximation used for larger samples.

5. Examples and Applications:

- Provides practical examples of the statistics used in various non-parametric tests, including degrees of freedom where they apply.

- Discusses real-world applications of non-parametric tests and the interpretation of results based on degrees of freedom.
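Of the tests above, Kruskal-Wallis is the one that uses DF. The sketch below, with hypothetical function names and toy data, ranks the pooled observations (ties get average ranks), computes H = 12 / (N(N + 1)) × Σ Ri² / ni – 3(N + 1) without the tie correction, and returns k – 1 as the chi-square reference DF.

```python
def average_ranks(values):
    """Rank values 1..N, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for m in range(i, j + 1):
            ranks[order[m]] = avg
        i = j + 1
    return ranks


def kruskal_wallis(groups):
    """H statistic (no tie correction) and its chi-square reference DF."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    total, start = 0.0, 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    h = 12 / (n_total * (n_total + 1)) * total - 3 * (n_total + 1)
    return h, len(groups) - 1


h, df = kruskal_wallis([[1, 2, 3], [4, 5, 6], [7, 8, 9]])
print(round(h, 2), df)  # 7.2 2
```

With real data containing many ties, a tie-corrected H (as computed by statistical packages) would be preferable; the DF stay at k – 1 either way.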

Degrees of Freedom in Structural Equation Modeling (SEM):

Calculation Method:

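- Explains how degrees of freedom are calculated in SEM: the number of distinct variances and covariances among the p observed variables, p(p + 1)/2, minus the number of freely estimated parameters. Sample size does not enter this count.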

- Discusses the formula for determining degrees of freedom in SEM and its significance in model evaluation.

Model Complexity:

- Explores how the number of parameters estimated in the SEM affects the degrees of freedom.

- Discusses the trade-off between model complexity and degrees of freedom, emphasizing the need for parsimony.

Sample Size Considerations:

- Examines how sample size, although it does not change the model degrees of freedom, affects the chi-square test statistic and the precision of parameter estimates.

- Discusses guidelines for ensuring an adequate sample size to support the estimation of parameters and model fit assessment.

Interpretation of Results:

- Provides insights into interpreting SEM results based on degrees of freedom.

- Discusses how degrees of freedom influence model fit indices and statistical significance tests.

Practical Applications:

- Offers practical examples illustrating the calculation and interpretation of degrees of freedom in SEM.

- Discusses real-world applications of SEM and the importance of considering degrees of freedom in model estimation and evaluation.
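For SEM, the count can be sketched in a few lines. The function name is hypothetical, and the example assumes a one-factor confirmatory model with four indicators and the factor variance fixed to 1, giving 4 loadings + 4 residual variances = 8 free parameters. A non-negative DF is necessary but not sufficient for identification, so this check is only a first screen.

```python
def sem_df(n_observed, n_free_params, include_means=False):
    """Model DF = distinct sample moments - freely estimated parameters."""
    p = n_observed
    moments = p * (p + 1) // 2  # unique variances and covariances
    if include_means:
        moments += p            # one mean per observed variable
    df = moments - n_free_params
    if df < 0:
        raise ValueError("more free parameters than moments: model cannot be identified")
    return df


# One-factor model, 4 indicators, factor variance fixed to 1:
# 10 moments - 8 free parameters.
print(sem_df(4, 8))  # 2
```

Note that the sample size appears nowhere in the calculation; it affects the model chi-square and standard errors, not the DF.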

Practical Considerations and Limitations:

Sample Size Requirements:

- Discusses the impact of sample size on degrees of freedom and statistical power.

- Provides guidelines for determining adequate sample sizes to ensure reliable estimation and inference.

Assumptions of Statistical Tests:

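- Explores how violations of assumptions can change the appropriate degrees of freedom; for example, unequal variances lead to Welch-adjusted DF in t-tests and one-way ANOVA, and violations of sphericity lead to Greenhouse-Geisser-corrected DF in repeated-measures ANOVA.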

- Discusses strategies for addressing violations and ensuring the validity of statistical analyses.

Model Complexity vs. Degrees of Freedom:

- Considers the trade-off between model complexity and degrees of freedom in regression and SEM.

- Highlights the importance of balancing model fit with parsimony to avoid overfitting.

Effect on Statistical Inference:

- Discusses how degrees of freedom impact the interpretation of test statistics and p-values.

- Emphasizes the need to consider degrees of freedom when drawing conclusions from statistical analyses.

Limitations of Degrees of Freedom:

- Acknowledges the limitations of degrees of freedom in certain statistical methods, such as non-parametric tests.

- Discusses alternative approaches for addressing limitations and ensuring robust statistical inference.

In Summary:

Degrees of freedom (DF) are a key idea in statistics, and they affect the validity and reliability of analyses across many methods. Understanding DF clarifies the constraints and flexibility present in data, which leads to better interpretations and better-informed decisions. DF are essential for choosing appropriate critical values, evaluating model fit, and interpreting results in hypothesis testing, regression analysis, experimental design, and structural equation modeling. By taking practical considerations and limitations into account, researchers can handle the complexities of DF effectively, producing sound statistical analyses and meaningful results that advance knowledge in their fields.

FAQs (Frequently Asked Questions)

What are degrees of freedom (DF) in statistics?

DF represent the number of values in a statistical analysis that are free to vary after constraints, such as estimated parameters, have been imposed.

Why are degrees of freedom important?

They determine critical values, assess model fit, and ensure valid statistical inferences.

How are degrees of freedom calculated?

Calculation varies by analysis; e.g., in a one-sample t-test, it’s the sample size minus one.

What is the significance of degrees of freedom in ANOVA?

They partition variability into between-group (k – 1) and within-group (N – k) components, which together define the F-test used for significance testing.

How do degrees of freedom affect regression analysis?

Residual DF equal n – p – 1, so they decrease as predictors are added, which reduces the precision of coefficient estimates and affects measures of model fit such as adjusted R².

What are effective degrees of freedom in non-parametric tests?

They generalize DF to settings where DF cannot simply be counted, such as non-parametric smoothing or dependent data, so that inference remains accurate.

How can researchers ensure adequate degrees of freedom?

By collecting enough observations relative to the number of parameters being estimated and by checking the assumptions of the chosen test.

What are the limitations of degrees of freedom?

Assumptions like normality can limit parametric tests, while determining effective degrees of freedom in non-parametric tests can be challenging.

Where can I learn more about degrees of freedom in statistics?

Resources like textbooks, courses, and academic articles offer comprehensive explanations and examples.